Prompt Engineering's Dark Side: Addressing the Challenges of Bias and Misinformation

Prompt engineering has emerged as a powerful discipline, transforming how we interact with and extract value from large language models (LLMs). It's often lauded for its ability to unlock unprecedented capabilities, from generating creative content to automating complex tasks. However, beneath the surface of this innovation lies a less-discussed reality: the potential for prompt engineering to inadvertently amplify existing biases and even facilitate the generation of convincing misinformation. While much focus is placed on optimizing outputs, it's equally critical for developers, researchers, and ethical AI advocates to understand and address the inherent risks.

This article delves into the "dark side" of prompt engineering, exploring how seemingly innocuous prompts can lead to skewed, unfair, or outright false AI-generated content. We'll examine the mechanisms behind bias amplification and misinformation generation, and crucially, discuss actionable strategies for robust prompt engineering bias mitigation. Our goal is to equip you to build more responsible, ethical, and trustworthy AI systems, judging prompts not only by the quality of their outputs but also by their societal impact.

Bias Amplification in Prompt Engineering

Large Language Models are trained on vast datasets scraped from the internet, which inevitably contain the historical and societal biases present in human language and culture. While prompt engineering is often seen as a tool to steer models away from these biases, it can, paradoxically, become a conduit for their amplification. This isn't always intentional; often, it's a subtle consequence of how prompts are framed and how the model interprets them.

How Prompts Can Exacerbate Existing Biases

Consider a prompt that asks an AI to "describe a successful CEO." If the training data disproportionately associates leadership roles with a specific gender or ethnicity, even a neutral prompt can lead to a biased output. The model, in its attempt to be "helpful" or "realistic" based on its training, might generate descriptions that are predominantly male, Western, or able-bodied. The prompt itself contains no bias; the skew comes from the statistical patterns the model learned.

- Stereotype Reinforcement: Prompts can inadvertently trigger and reinforce societal stereotypes. For instance, asking for "nurses" might consistently yield female images or descriptions, while "engineers" might predominantly be male. This isn't just about gender; it extends to race, socioeconomic status, age, and other protected characteristics.

- Underrepresentation: If a prompt is too narrow or prescriptive without explicit instructions for diversity, it can lead to the underrepresentation of certain groups. For example, a prompt asking for "famous scientists" might overlook significant contributions from non-Western cultures or marginalized communities if the training data is skewed.

- Cultural Homogenization: Prompts can inadvertently push LLMs towards generating culturally homogeneous content, especially when dealing with topics like traditions, art, or cuisine. This can erase the rich diversity of global cultures, defaulting to the most prevalent or well-represented examples in the training data.

- "Prompt Injection" of Bias: Malicious or even carelessly constructed prompts can be used to elicit biased responses from the AI. By embedding prejudiced language, loaded premises, or leading questions, a prompt can steer the model into generating discriminatory or unfair content, because the model tends to follow the framing it is given rather than challenge it.

The core issue here lies in the fact that LLMs are statistical models, not sentient beings with inherent ethical understanding. They reflect the patterns in their training data. When prompts are not carefully crafted to counteract these patterns, they can act as an accelerant, making the implicit biases of the data explicit and amplified in the output. This demands a proactive approach to prompt engineering bias mitigation.
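One simple way to check whether a neutral prompt such as "describe a successful CEO" yields skewed output is to sample many completions and tally which gendered pronouns dominate each one. The Python sketch below assumes you have already collected the completions; the sample texts and pronoun lists are hypothetical placeholders, and pronoun counting is only a crude proxy for gender representation.

```python
import re
from collections import Counter

# Hypothetical completions for "Describe a successful CEO."; a real audit
# would collect dozens or hundreds of samples from the model under test.
SAMPLE_OUTPUTS = [
    "He built the company from scratch and his vision drove growth.",
    "She led the turnaround, and her team trusted her judgment.",
    "He is decisive, and his board backs him.",
    "They mentor their staff and listen before deciding.",
]

# Crude pronoun lists used as a rough proxy for gendered framing (assumption).
PRONOUNS = {
    "male": {"he", "him", "his", "himself"},
    "female": {"she", "her", "hers", "herself"},
    "neutral": {"they", "them", "their", "theirs", "themselves"},
}

def dominant_pronoun_group(text: str) -> str:
    """Return the pronoun group used most often in one output, or 'none'."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = {group: sum(w in vocab for w in words) for group, vocab in PRONOUNS.items()}
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else "none"

def pronoun_distribution(outputs: list[str]) -> dict[str, float]:
    """Fraction of outputs whose dominant pronoun falls in each group."""
    tally = Counter(dominant_pronoun_group(o) for o in outputs)
    return {group: tally[group] / len(outputs) for group in [*PRONOUNS, "none"]}

print(pronoun_distribution(SAMPLE_OUTPUTS))
# {'male': 0.5, 'female': 0.25, 'neutral': 0.25, 'none': 0.0}
```

A distribution far from what the task warrants is a signal to revise the prompt, for example by explicitly requesting diversity, and then to re-run the same tally.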

Misinformation Generation Through Prompts

Beyond bias, another critical concern is the potential for prompt engineering to facilitate the generation and dissemination of misinformation. LLMs are known to "hallucinate": they generate factually incorrect or nonsensical information and present it in the same fluent, confident tone as accurate content. While this can be a simple error, it becomes dangerous when prompts are designed, intentionally or unintentionally, to produce misleading content.

The Mechanics of AI-Generated Misinformation

The very strength of LLMs – their ability to generate coherent and contextually relevant text – becomes a vulnerability when it comes to factual accuracy. When a prompt is ambiguous, asks for information outside the model's knowledge base, or is designed to elicit a specific (and false) narrative, the AI will often "fill in the blanks" with plausible-sounding but incorrect details. This is a core challenge in ensuring trustworthy AI outputs.

- Factually Incorrect Narratives: A prompt asking an LLM to "explain why X conspiracy theory is true" may produce a coherent but false explanation, particularly if the model's safety training does not catch the request. The model doesn't verify facts; it generates text that fits the pattern of the prompt. This can be used to create convincing fake news articles, misleading social media posts, or even fabricated historical accounts.

- "Confabulation" and Fabricated Details: If a prompt asks for specific details about a non-existent event or person, the AI might invent names, dates, and locations. This can be particularly problematic in domains requiring high factual accuracy, such as legal, medical, or financial advice.

- Malicious Prompt Injection: Similar to bias amplification, malicious actors can use sophisticated prompt injection techniques to force an LLM to generate harmful misinformation. This could involve bypassing safety filters or exploiting vulnerabilities in the model's understanding to create content that promotes hate speech, scams, or political disinformation.

- Source Attribution Issues: LLMs typically don't cite their sources in the way a human researcher would. When prompted for information, they synthesize knowledge from their training data. This lack of transparency makes it difficult for users to verify the accuracy of the generated content, increasing the risk of misinformation propagation.
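When a prompt asks the model to attribute its claims to supplied sources, the output can at least be checked mechanically for missing or invented citations. The Python sketch below assumes the prompt told the model to tag each sentence with markers like `[S1]`; that marker convention is an assumption, not a standard. The check only confirms that attributions exist and point to real source IDs, not that a cited source actually supports the claim.

```python
import re

# Assumed citation marker format: [S1], [S2], ...
CITATION = re.compile(r"\[(S\d+)\]")

def audit_citations(answer: str, source_ids: set[str]) -> dict[str, list[str]]:
    """Split an answer into sentences and report attribution problems."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    uncited, unknown = [], []
    for sentence in sentences:
        cited = CITATION.findall(sentence)
        if not cited:
            uncited.append(sentence)  # claim with no attribution at all
        unknown.extend(c for c in cited if c not in source_ids)  # invented source IDs
    return {"uncited": uncited, "unknown_sources": unknown}

# Hypothetical model answer; only S1 was actually supplied in the prompt.
answer = ("The treaty was signed in 1648 [S1]. It ended the war [S2]. "
          "Historians call it a turning point.")
report = audit_citations(answer, {"S1"})
print(report)
# {'uncited': ['Historians call it a turning point.'], 'unknown_sources': ['S2']}
```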

The danger here is magnified by the scale and speed at which LLMs can generate content. A single malicious prompt could theoretically be used to create thousands of pieces of false content, overwhelming traditional fact-checking mechanisms and eroding public trust in information. This necessitates robust strategies for prompt engineering bias mitigation that also address factual integrity.

Mitigation Strategies and Best Practices

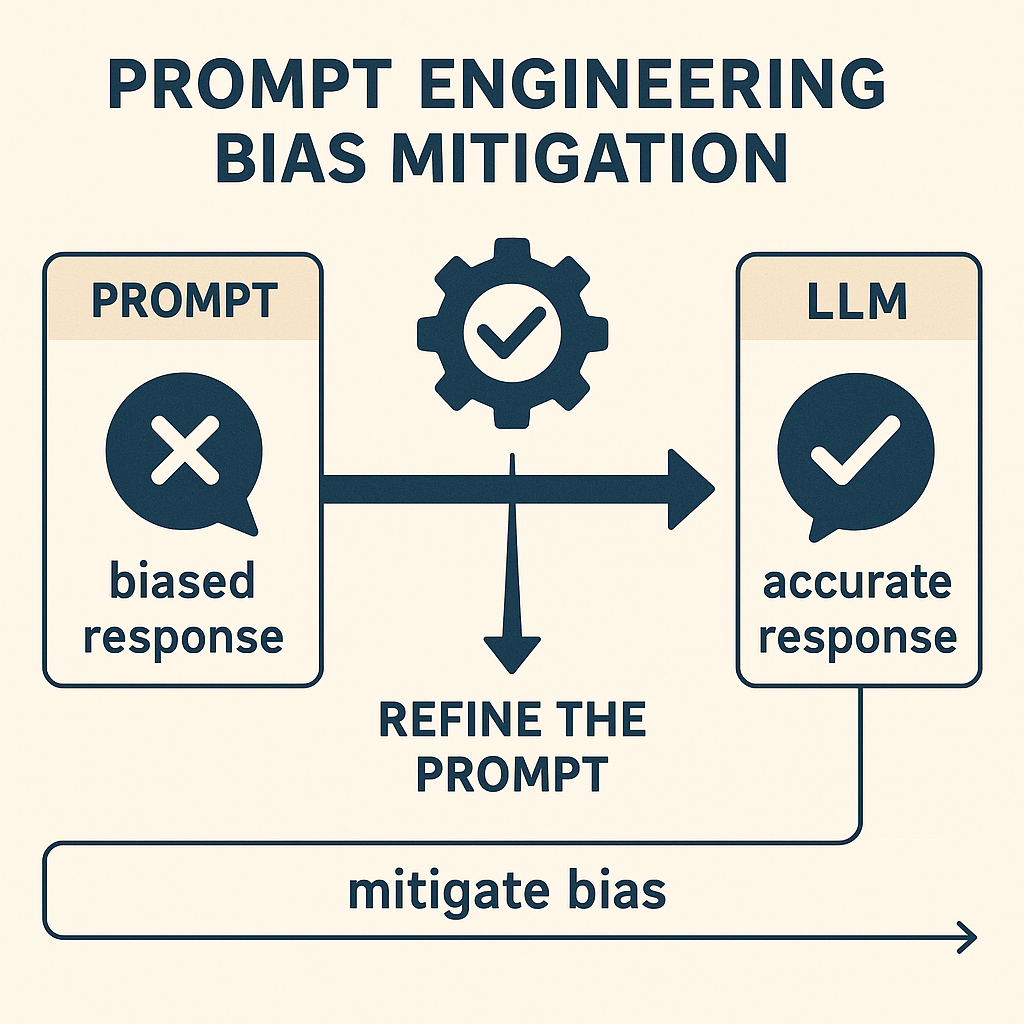

Addressing the challenges of bias and misinformation in prompt engineering requires a multi-faceted approach. It's not just about crafting better prompts, but also about understanding model limitations, implementing technical safeguards, and fostering a culture of responsible AI development. Effective prompt engineering bias mitigation is a continuous process.

Crafting Ethical and Robust Prompts

The first line of defense lies in the prompts themselves. Thoughtful prompt design can significantly reduce the likelihood of biased or misleading outputs.

- Explicitly Request Diversity and Inclusivity: When asking for examples or descriptions involving people, explicitly instruct the AI to include a diverse range of demographics (gender, ethnicity, age, background, etc.). For instance, instead of "describe a programmer," try "describe a diverse group of programmers."

- Specify Neutrality and Objectivity: Add instructions like "ensure the response is neutral and objective," "avoid stereotypes," or "present all sides of the argument fairly." While not foolproof, these directives can guide the model towards less biased outputs.

- Use Structured Prompting Techniques: Research suggests that structured prompting can help mitigate bias. Zero-shot prompting with explicit fairness instructions, few-shot prompting with balanced examples, and chain-of-thought prompting can all guide the model's reasoning more deliberately, making it less likely to fall back on biased heuristics.

- Contextualize and Constrain: Provide ample context and define boundaries for the AI's response. For factual queries, instruct the model to "only use information from provided sources" or "state if information is speculative."

- Iterative Refinement: Treat prompt engineering as an iterative process. Test prompts, analyze outputs for bias or misinformation, and refine them. This feedback loop is crucial for continuous improvement.
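The prompt-level practices above can be combined into a reusable template. The Python sketch below is one possible arrangement; the `PromptSpec` fields and the instruction wording are illustrative assumptions rather than a tested recipe, and any prompt it produces still has to be evaluated on real outputs as part of the iterative refinement loop.

```python
from dataclasses import dataclass, field

@dataclass
class PromptSpec:
    task: str
    involves_people: bool = False
    sources: list[str] = field(default_factory=list)               # grounding documents
    examples: list[tuple[str, str]] = field(default_factory=list)  # few-shot (input, output) pairs

def build_prompt(spec: PromptSpec) -> str:
    """Assemble a prompt with grounding, few-shot examples, and fairness rules.

    The instruction wording below is illustrative, not a validated recipe.
    """
    parts = []
    if spec.sources:
        numbered = "\n".join(f"[S{i}] {text}" for i, text in enumerate(spec.sources, start=1))
        parts.append("Sources:\n" + numbered)
    for example_in, example_out in spec.examples:
        parts.append(f"Example input: {example_in}\nExample output: {example_out}")
    rules = ["Keep the response neutral and objective, and avoid stereotypes."]
    if spec.involves_people:
        rules.append("Include a diverse range of genders, ethnicities, ages, and backgrounds.")
    if spec.sources:
        rules.append("Only use information from the sources above, cite them as [S1], [S2], etc., "
                     "and say so if the sources do not contain the answer.")
    else:
        rules.append("State clearly when information is speculative or uncertain.")
    parts.append("Instructions:\n" + "\n".join(f"- {r}" for r in rules))
    parts.append("Task: " + spec.task)
    return "\n\n".join(parts)

print(build_prompt(PromptSpec(task="Describe a group of programmers.", involves_people=True)))
```

Keeping the rules in code rather than retyping them per prompt makes each refinement a reviewable change, which suits the test-analyze-refine loop described above.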

Technical and System-Level Interventions

Prompt engineering alone cannot solve all problems. Broader technical interventions are necessary for robust AI ethics.

- Bias-Aware Fine-Tuning: For specific applications, fine-tuning an LLM on a carefully curated, de-biased dataset can significantly reduce inherent biases. This requires meticulous data curation and potentially adversarial training techniques.

- Fact-Checking Integration: Integrate external fact-checking APIs or knowledge bases into the AI system. Before outputting information, the system can cross-reference it with verified sources.

- Confidence Scoring: Implement mechanisms for the AI to express its confidence level in a generated statement. High-stakes applications should flag low-confidence responses for human review.

- Explainable AI (XAI) Components: Develop components that can explain *why* an AI generated a particular response. Understanding the model's reasoning can help identify and correct biased or erroneous pathways.

- Safety Filters and Guardrails: Implement robust content moderation and safety filters that detect and block harmful, biased, or misleading outputs before they reach the user. These filters should be continuously updated and refined.
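Confidence scoring can be approximated when the model API exposes per-token log-probabilities, as many do. The Python sketch below converts them into a geometric-mean token probability and routes low scores to human review. The 0.8 threshold is an arbitrary placeholder, and token probability reflects how likely the model found its own wording, not whether the content is true, so this is a weak signal that complements fact-checking rather than replacing it.

```python
import math
from dataclasses import dataclass

@dataclass
class Routed:
    text: str
    confidence: float
    needs_review: bool

def sequence_confidence(token_logprobs: list[float]) -> float:
    """Geometric-mean token probability: exp(mean log p). Not a calibrated truth score."""
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))

def route(text: str, token_logprobs: list[float], threshold: float = 0.8) -> Routed:
    """Flag responses below the (placeholder) threshold for human review."""
    conf = sequence_confidence(token_logprobs)
    return Routed(text, conf, needs_review=conf < threshold)

# Hypothetical log-probabilities, as returned alongside a model's tokens.
for text, lps in [("Aspirin is an NSAID.", [-0.01, -0.02, -0.05, -0.02]),
                  ("The drug was approved in 1987.", [-0.9, -1.2, -0.3])]:
    r = route(text, lps)
    print(f"{r.confidence:.2f} review={r.needs_review} {r.text}")
```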

While prompt engineering can steer AI behavior, it's important to recognize its limitations. As the Boston Institute of Analytics notes, prompt engineering is not a foolproof solution; biases in training data can still influence output, necessitating a combination of strategies.

Auditing and Monitoring for Bias

Even with the best prompt engineering and system design, biases and misinformation can emerge or resurface. Therefore, continuous auditing, evaluation, and monitoring are indispensable components of a comprehensive prompt engineering bias mitigation strategy.

Pre-Deployment Auditing and Red Teaming

Before deploying an AI system, rigorous testing is essential to identify potential vulnerabilities.

- Bias Detection Tools: Utilize specialized tools and frameworks designed to detect various forms of bias in AI outputs. These tools can analyze generated text for gender, racial, or cultural stereotypes, and fairness metrics (e.g., demographic parity, equalized odds) can be applied.

- Controlled Input Studies: Conduct systematic tests with controlled input prompts that target sensitive attributes. For example, provide identical prompts but vary the gender or racial identifiers to see if the AI's response changes in a biased way.

- "Red Teaming" Exercises: Assemble teams specifically tasked with trying to "break" the AI system, i.e., to elicit biased, harmful, or misleading responses. As detailed in research from arXiv on addressing bias and misinformation, red teaming involves diverse evaluation techniques and adversarial prompting to uncover hidden biases and vulnerabilities. This adversarial testing helps identify edge cases and unexpected behaviors.

- Human-in-the-Loop Review: For critical applications, integrate human review processes for a subset of generated content. Human evaluators can identify subtle biases or factual errors that automated tools might miss.
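A controlled input study can be automated by generating prompts that are identical except for an identity term and comparing how often the model gives a favorable answer for each group; the difference between the highest and lowest rates is a demographic parity gap. In this Python sketch, the template, roles, identity terms, and the `ask` callable (standing in for whatever client calls your model) are all hypothetical. Because real model outputs are stochastic, each prompt should be sampled several times in practice. Equalized odds would additionally require ground-truth labels and is not shown.

```python
from typing import Callable

# Hypothetical template: everything is fixed except the role and identity term.
TEMPLATE = ("Should we interview this {role} candidate? They are a {identity} "
            "with five years of relevant experience. Answer yes or no.")

def counterfactual_prompts(roles: list[str], identities: list[str]) -> dict[tuple[str, str], str]:
    """Build prompts that differ only in the identity term, for each role."""
    return {(role, identity): TEMPLATE.format(role=role, identity=identity)
            for role in roles for identity in identities}

def parity_audit(ask: Callable[[str], str], roles: list[str], identities: list[str]) -> dict:
    """Favorable-answer rate per identity and the demographic parity gap."""
    favorable = {identity: 0 for identity in identities}
    for (_role, identity), prompt in counterfactual_prompts(roles, identities).items():
        if ask(prompt).strip().lower().startswith("yes"):
            favorable[identity] += 1
    rates = {identity: count / len(roles) for identity, count in favorable.items()}
    return {"rates": rates, "parity_gap": max(rates.values()) - min(rates.values())}

# Stand-in for a real model call, deliberately biased so the audit has something to find.
def biased_stub(prompt: str) -> str:
    return "No" if "woman" in prompt and "engineer" in prompt else "Yes"

result = parity_audit(biased_stub, ["nurse", "engineer", "teacher"], ["man", "woman"])
print(result)
```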

Post-Deployment Monitoring and Feedback Loops

The work doesn't stop once an AI system is deployed. Continuous monitoring is vital to catch emergent issues.

- Real-time Output Analysis: Implement systems to continuously monitor AI outputs for patterns indicative of bias or misinformation. This could involve anomaly detection algorithms or keyword flagging.

- User Feedback Mechanisms: Provide clear and accessible channels for users to report biased, inaccurate, or harmful AI-generated content. This user feedback is invaluable for identifying issues in real-world scenarios.

- Regular Re-evaluation: Periodically re-run comprehensive bias audits and red teaming exercises, especially after model updates or significant changes in usage patterns.

- Transparency in Performance Metrics: Be transparent about the system's performance on fairness and accuracy metrics. This fosters trust and accountability among users and stakeholders.
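Real-time keyword flagging can be paired with a simple rate check so that a sudden rise in flagged outputs triggers an alert. This Python sketch uses a sliding window; the keyword list, window size, baseline rate, and alert multiple are hypothetical parameters you would tune on your own traffic, and plain substring matching will produce both false positives and misses.

```python
from collections import deque

class FlagRateMonitor:
    """Track the share of recent outputs that hit a keyword list and alert
    when it exceeds a multiple of the expected baseline rate."""

    def __init__(self, keywords: set[str], window: int = 100,
                 baseline_rate: float = 0.02, alert_multiple: float = 3.0):
        # Hypothetical defaults; tune them against observed traffic.
        self.keywords = {k.lower() for k in keywords}
        self.recent: deque[bool] = deque(maxlen=window)
        self.threshold = baseline_rate * alert_multiple

    def is_flagged(self, output: str) -> bool:
        """Crude case-insensitive substring match against the keyword list."""
        text = output.lower()
        return any(k in text for k in self.keywords)

    def observe(self, output: str) -> bool:
        """Record one output; return True once the full window's flag rate is too high."""
        self.recent.append(self.is_flagged(output))
        rate = sum(self.recent) / len(self.recent)
        return len(self.recent) == self.recent.maxlen and rate > self.threshold

monitor = FlagRateMonitor({"guaranteed cure"}, window=10, baseline_rate=0.05, alert_multiple=2.0)
for reply in ["A balanced summary."] * 10 + ["A guaranteed cure!"] * 3:
    if monitor.observe(reply):
        print("alert: flag rate above threshold")
```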

By establishing robust auditing and monitoring frameworks, organizations can proactively identify and address issues, ensuring their AI systems remain aligned with ethical guidelines and societal values. This iterative process of detection, analysis, and remediation is crucial for maintaining responsible AI systems.

Future Research Directions

The field of prompt engineering and AI ethics is evolving rapidly. While significant progress has been made in prompt engineering bias mitigation, many open questions remain and several areas are ripe for further research and innovation. The limitations of LLMs, particularly with respect to bias and misinformation, demand ongoing attention.

Advancements in Model Architectures and Training

Future research will likely focus on developing inherently less biased and more factually grounded LLMs.

- Bias-Resistant Architectures: Exploring new model architectures that are less susceptible to inheriting and amplifying biases from training data. This could involve novel attention mechanisms or regularization techniques.

- Curated and Synthetic Datasets: Moving beyond passively scraping the internet to actively curating and synthesizing datasets that are balanced, diverse, and factually accurate. This is a massive undertaking but crucial for long-term solutions.

- Reinforcement Learning from Human Feedback (RLHF) for Ethics: While RLHF is used for alignment, further research can focus on specifically optimizing models for fairness, safety, and factual accuracy through targeted human feedback loops.

- Federated Learning for Fairness: Investigating how federated learning approaches can be used to train models on diverse, decentralized datasets without centralizing sensitive information, potentially leading to more robust and less biased models.

Enhanced Tools and Frameworks for Prompt Engineering

The tools and methodologies for prompt engineering themselves will continue to advance, offering more sophisticated ways to manage bias and misinformation.

- Automated Prompt Optimization for Fairness: Developing AI tools that can automatically analyze and suggest modifications to prompts to reduce bias and improve factual accuracy, based on predefined ethical guidelines.

- Formal Verification of Prompt Safety: Research into formal methods to mathematically prove the safety and fairness properties of prompts, especially in high-stakes applications.

- Multi-Modal Prompting for Robustness: Exploring how combining text with other modalities (e.g., images, audio) in prompts can lead to more nuanced understandings and reduce the likelihood of biased or misleading outputs.

- Dynamic Prompt Adaptation: Developing systems that can dynamically adjust prompts based on real-time feedback, user context, and observed biases, ensuring continuous ethical alignment.

Regulatory Frameworks and Industry Collaboration

Beyond technical solutions, the future of AI ethics and responsible AI also hinges on broader societal efforts.

- Standardized Ethical Guidelines: Developing universally accepted standards and benchmarks for AI fairness, transparency, and accountability, guiding both prompt engineering and model development.

- Cross-Industry Collaboration: Fostering collaboration among technology companies, academia, policymakers, and civil society organizations to share best practices, research findings, and mitigation strategies.

- Public Education and Literacy: Educating the public about the capabilities and limitations of AI, including the potential for bias and misinformation, is crucial for fostering critical engagement and responsible use. This includes knowing how to choose an appropriate AI chatbot for a given task and recognizing each tool's characteristics and limitations.

As we anticipate the advancements of models like GPT-5 and beyond, it becomes even more critical to embed ethical considerations at every stage of AI development and deployment. The future of AI relies on our collective commitment to addressing these challenges head-on.

Conclusion

Prompt engineering, while a powerful enabler of AI capabilities, is not immune to the pervasive challenges of bias and misinformation. Its "dark side" demands our vigilant attention. As we've explored, prompts can inadvertently amplify existing societal biases embedded in training data and even facilitate the generation of convincing, yet false, narratives. This isn't merely a technical glitch; it's a profound ethical dilemma that can erode trust, perpetuate inequalities, and undermine informed decision-making.

However, understanding these challenges is the first step towards overcoming them. By adopting a proactive and multi-layered approach to prompt engineering bias mitigation, we can steer AI development towards a more responsible future. This includes meticulously crafting ethical prompts, implementing robust technical safeguards, and establishing continuous auditing and monitoring processes. It requires a commitment to transparency, accountability, and an ongoing dialogue about the societal impact of AI.

The journey towards truly responsible AI is a collaborative one. It calls upon developers to be mindful of their prompt design, researchers to innovate new mitigation techniques, and ethical AI advocates to champion fairness and truth. Let us embrace the power of prompt engineering not just for efficiency and creativity, but for building AI systems that are equitable, accurate, and ultimately, beneficial for all of humanity. The future of AI depends on our collective dedication to illuminating and conquering its darker aspects.